Publications

2020

[New] Use the Force, Luke! Learning to Predict Physical Forces by Simulating Effects

Kiana Ehsani, Shubham Tulsiani, Saurabh Gupta, Ali Farhadi, Abhinav Gupta

CVPR, 2020

pdf project page abstract bibtex

[New] Intrinsic Motivation for Encouraging Synergistic Behavior

Rohan Chitnis, Shubham Tulsiani, Saurabh Gupta, Abhinav Gupta

ICLR, 2020

pdf project page abstract bibtex

[New] Discovering Motor Programs by Recomposing Demonstrations

Tanmay Shankar, Shubham Tulsiani, Lerrel Pinto, Abhinav Gupta

ICLR, 2020

pdf abstract bibtex

[New] Efficient Bimanual Manipulation using Learned Task Schemas

Rohan Chitnis, Shubham Tulsiani, Saurabh Gupta, Abhinav Gupta

ICRA, 2020

preprint abstract bibtex video

2019

Object-centric Forward Modeling for Model Predictive Control

Yufei Ye, Dhiraj Gandhi, Abhinav Gupta, Shubham Tulsiani

CORL, 2019

pdf project page abstract bibtex

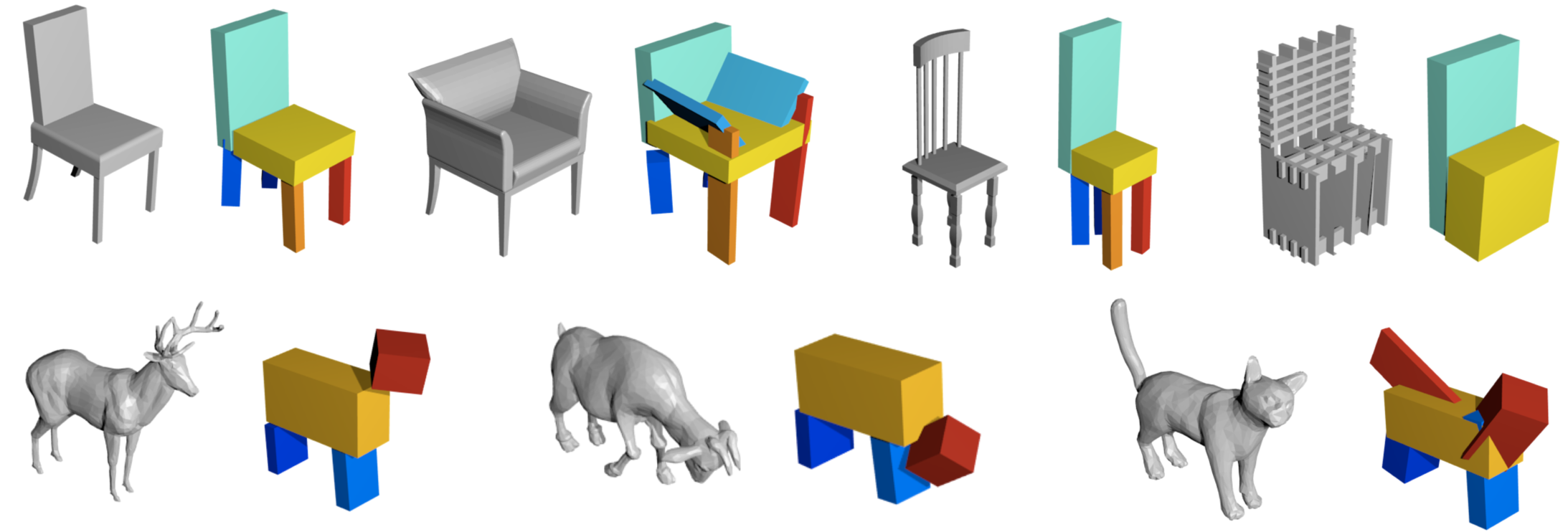

3D-RelNet: Joint Object and Relational Network for 3D Prediction

Nilesh Kulkarni, Ishan Misra, Shubham Tulsiani, Abhinav Gupta

ICCV, 2019

pdf project page abstract bibtex code

Order-Aware Generative Modeling Using the 3D-Craft Dataset

Zhuoyuan Chen*, Demi Guo*, Tong Xiao*, et. al.

ICCV, 2019

pdf abstract bibtex

Multi-view Supervision for Single-view Reconstruction via Differentiable Ray Consistency

Shubham Tulsiani, Tinghui Zhou, Alexei A. Efros, Jitendra Malik

TPAMI, 2019 (journal version combining DRC and MVC)

pdf abstract bibtex code

Hierarchical Surface Prediction

Christian Häne, Shubham Tulsiani, Jitendra Malik

TPAMI, 2019 (journal version of HSP)

pdf abstract bibtex code

2018

Learning Category-Specific Mesh Reconstruction from Image Collections

Angjoo Kanazawa*, Shubham Tulsiani*, Alexei A. Efros, Jitendra Malik

ECCV, 2018

pdf project page abstract bibtex video code

Multi-view Consistency as Supervisory Signal for Learning Shape and Pose Prediction

Shubham Tulsiani, Alexei A. Efros, Jitendra Malik

CVPR, 2018

pdf project page abstract bibtex code

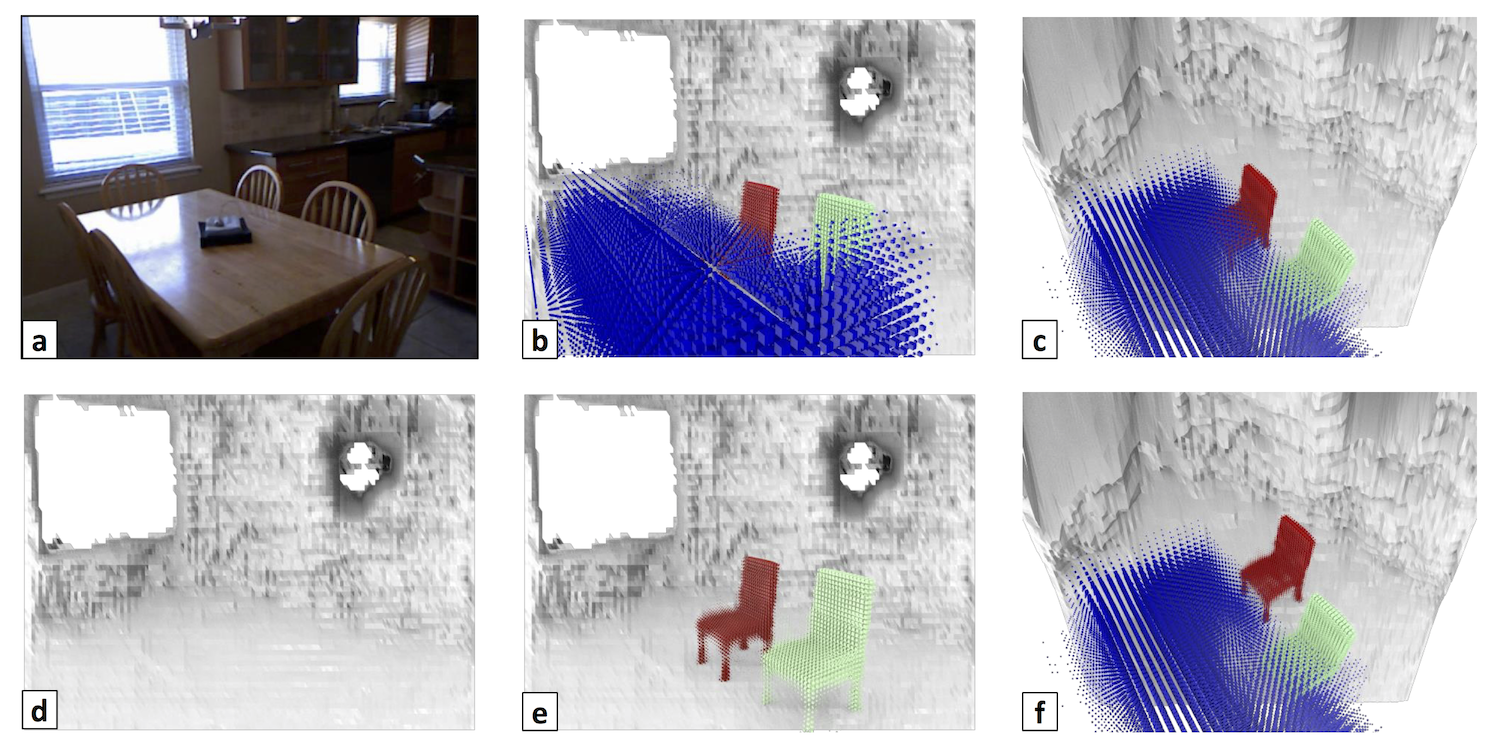

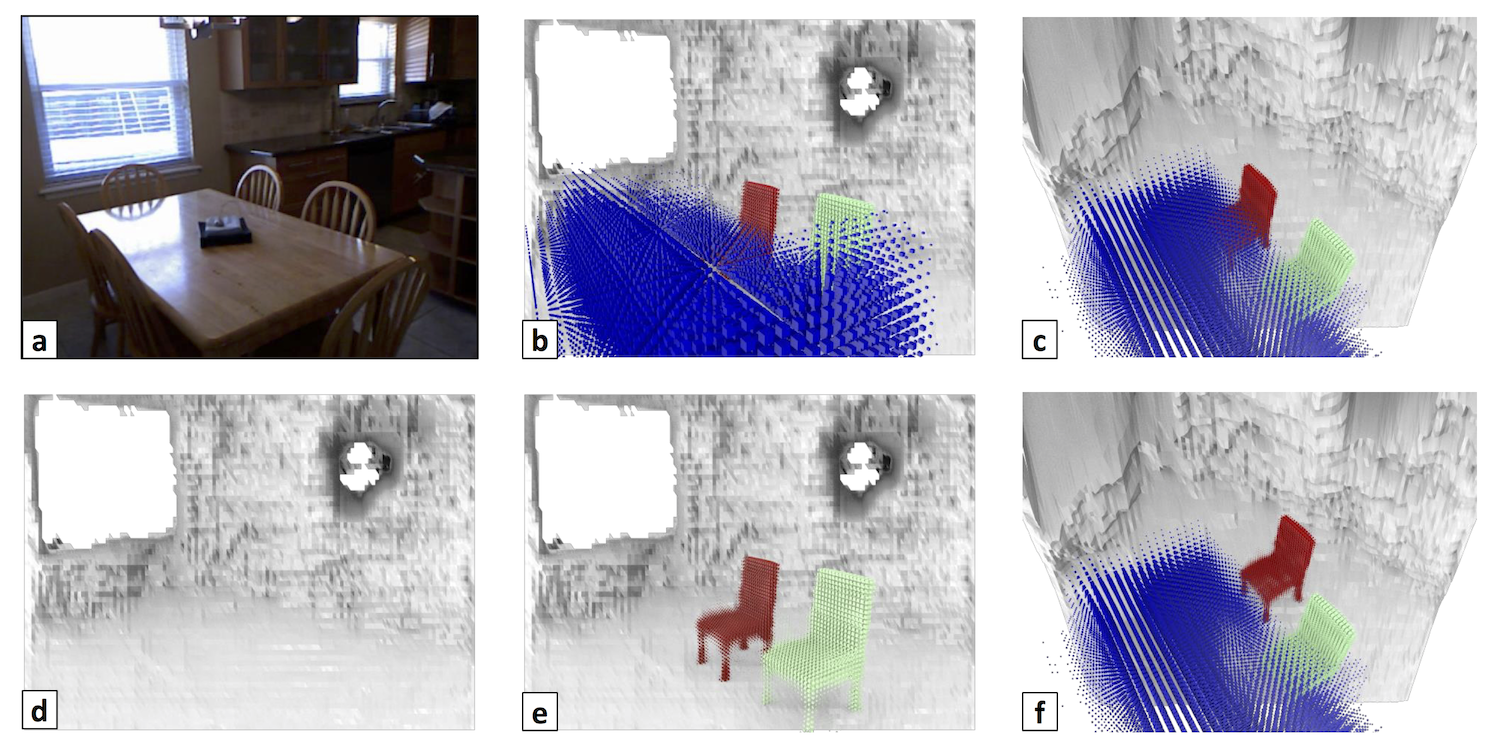

Factoring Shape, Pose, and Layout from the 2D Image of a 3D Scene

Shubham Tulsiani, Saurabh Gupta, David Fouhey, Alexei A. Efros, Jitendra Malik

CVPR, 2018

pdf project page abstract bibtex code

2017

Hierarchical Surface Prediction for 3D Object Reconstruction

Christian Häne, Shubham Tulsiani, Jitendra Malik

3DV, 2017

pdf abstract bibtex slides code

2016

Learning Category-Specific Deformable 3D Models for Object Reconstruction

Shubham Tulsiani*, Abhishek Kar*, João Carreira, Jitendra Malik

TPAMI, 2016

pdf abstract bibtex code

View Synthesis by Appearance Flow

Tinghui Zhou, Shubham Tulsiani, Weilun Sun, Jitendra Malik, Alexei A. Efros

ECCV, 2016

pdf abstract bibtex code

2015

Pose Induction for Novel Object Categories

Shubham Tulsiani, João Carreira, Jitendra Malik

ICCV, 2015

pdf abstract bibtex code

Amodal Completion and Size Constancy in Natural Scenes

Abhishek Kar, Shubham Tulsiani, João Carreira, Jitendra Malik

ICCV, 2015

pdf abstract bibtex

Category-Specific Object Reconstruction from a Single Image

Abhishek Kar*, Shubham Tulsiani*, João Carreira, Jitendra Malik

CVPR, 2015 (Best Student Paper Award)

pdf project page abstract bibtex code note

Virtual View Networks for Object Reconstruction

João Carreira, Abhishek Kar, Shubham Tulsiani, Jitendra Malik

CVPR, 2015

pdf abstract bibtex video code

2013

A colorful approach to text processing by example

Kuat Yessenov, Shubham Tulsiani, Aditya Menon, Robert C Miller, Sumit Gulwani, Butler Lampson, Adam Kalai

UIST, 2013

pdf abstract bibtex